import matplotlib.pyplot as plt

from matplotlib import animation

import numpy as np

# I'm using seaborn for it's fantastic default styles

import seaborn as sns

sns.set_style("whitegrid")

# BaseStateSystem inlined from ijmbarr/turing-patterns

class BaseStateSystem:

"""

Base object for "State System". We are going to repeatedly visualise

systems which are Markovian: they have a "state", the state evolves

in discrete steps, and the next state only depends on the previous state.

"""

def __init__(self):

raise NotImplementedError()

def initialise(self):

raise NotImplementedError()

def initialise_figure(self):

fig, ax = plt.subplots()

return fig, ax

def update(self):

raise NotImplementedError()

def draw(self, ax):

raise NotImplementedError()

def plot_time_evolution(self, filename, n_steps=30):

"""Creates a gif from the time evolution of a basic state system."""

self.initialise()

fig, ax = self.initialise_figure()

def step(t):

self.update()

self.draw(ax)

anim = animation.FuncAnimation(fig, step, frames=np.arange(n_steps), interval=20)

anim.save(filename=filename, dpi=60, fps=10, writer='pillow')

plt.close()

def plot_evolution_outcome(self, filename, n_steps):

"""Evolves and saves the outcome of evolving the system for n_steps."""

self.initialise()

fig, ax = self.initialise_figure()

for _ in range(n_steps):

self.update()

self.draw(ax)

fig.savefig(filename)

plt.close()Turing Patterns

Adapted from ijmbarr

Turing Patterns

In 1952, Turing published a paper called “The Chemical Basis of Morphogenesis” suggesting a possible mechanism for how a simple set of chemical reactions could lead to the formation of stripes, spots and other patterns we see on animals. It is a surprisingly readable paper that covers some maths, some chemistry, some numerical modelling and ties it all together to try and answer a biological question.

At the core of the paper are a set of partial differential equations known as reaction-diffusion equations. Turing’s analysis shows that in certain regimes systems that obey these equations are unstable to small perturbations, leading to the growth of large scale patterns.

If you have only a basic understanding of chemistry (like I do) this might be surprising. Most chemical reactions I’ve seen involve homogeneous concentrations of reagents, reacting to create the final products, often described by a reaction equation like

\(A + B \rightarrow C + D\)

In this simplified model there is no room for pattern formation, because haven’t included any description about how concentrations of the different chemicals vary in space. Once we take into account these differences, a much richer set of behaviours is possible.

In this post, I am going explore the system described in the paper.

Reaction Diffusion Equations

Reaction-Diffusion equations are a class of partial differential equations whose dynamics are governed by two terms: a diffusion part and a reaction part. We will be looking at the two component case, which takes the form

\(\frac{\partial a(x,t)}{\partial t} = D_{a}\frac{\partial^{2} a(x,t)}{\partial x^{2}} + R_{a}(a(x,t),b(x,t))\)

\(\frac{\partial b(x,t)}{\partial t} = D_{b}\frac{\partial^{2} b(x,t)}{\partial x^{2}} + R_{b}(a(x,t),b(x,t))\)

Where the \(a(x,t)\) and \(b(x,t)\) describe the concentration of chemicals \(a\) and \(b\) at position \(x\) and time \(t\). The functions \(R_{a}\) and \(R_{b}\) determine how the concentrations change due to interspecies reactions and \(D_{a}\) and \(D_{b}\) are the diffusion coefficients.

Diffusion

The diffusion part of the equations causes areas of high concentration to spread out to areas of low concentration, while conserving the total amount of the chemical. To get a feel for what’s happening, let’s focus the equation

$ = D_{a} $

If this looks familiar, it is because it appears in a number of different areas of science and maths. A few are: the diffusion equation, the heat equation and Brownian motion.

It has an analytic solution, which for initial Gaussian distribution is

$ a(x,t) = (-)$

A “spreading out”, or diffusion over time, as the name suggests.

Simulating equations

If you’ve got this far, you might have noticed that this is written in a jupyter notebook. This allows us to mix code with writing, so we don’t just have to look at an equation to understand how it behaves - we can simulate it directly.

To simulate the PDEs, I’m going to use the explicit finite-difference method. It is not the best numerical method to use, but it is easy to code, and provided we keep the time step small enough it will be stable.

Under this scheme, we approximate the time derivative as

\[ \frac{\partial a(x,t)}{\partial t} \approx \frac{1}{dt}(a_{x,t+1} - a_{x,t}) \]

And the spatial part of the derivative (which is usually know as the Laplacian) as

\[ \frac{\partial^{2} a(x,t)}{\partial x^{2}} \approx \frac{1}{dx^{2}}(a_{x+1,t} + a_{x-1,t} - 2a_{x,t}) \]

Putting it all together, for the diffusion part of the equation we get.

\[ a_{x,t+1} = a_{x,t} + dt\left( \frac{D_{a}}{dx^{2}}(a_{x+1,t} + a_{x-1,t} - 2a_{x,t}) \right) \]

Let’s start by defining some functions to take care of the spatial derivatives. We will be using periodic boundary conditions throughout our exploration, which are easy to implement in numpy using its roll function.

def laplacian1D(a, dx):

return (

- 2 * a

+ np.roll(a,1,axis=0)

+ np.roll(a,-1,axis=0)

) / (dx ** 2)

def laplacian2D(a, dx):

return (

- 4 * a

+ np.roll(a,1,axis=0)

+ np.roll(a,-1,axis=0)

+ np.roll(a,+1,axis=1)

+ np.roll(a,-1,axis=1)

) / (dx ** 2)Connection to the matrix form of heat diffusion

Recall from the lecture (day 2) that when we discretized the heat equation on a rod, we ended up with a system of equations:

\[-y_{i-1} + 2y_i - y_{i+1} = f_i\]

which we represented as a matrix multiplication \(My = f\) where \(M\) is the tridiagonal matrix:

\[M = \begin{pmatrix} 2 & -1 & 0 & \cdots & 0 \\ -1 & 2 & -1 & \cdots & 0 \\ 0 & -1 & 2 & \cdots & 0 \\ \vdots & & \ddots & \ddots & -1 \\ 0 & 0 & \cdots & -1 & 2 \end{pmatrix}\]

We just need a way to write this type of matrix quickly and easily. We notice that there’s a pattern to the matrix: each row is like the row above, but shifted by one position to the right. We can use this to build the matrix quickly and easily. We use the function ‘np.roll’ which gives us an array that is shifted by a given number of positions, either to the right or to the left or up or down.

The laplacian1D function above computes exactly the same operation using np.roll: - np.roll(a, 1) shifts the array to get \(a_{i-1}\) values - np.roll(a, -1) shifts the array to get \(a_{i+1}\) values

- The sum np.roll(a,1) + np.roll(a,-1) - 2*a gives us \(a_{i-1} + a_{i+1} - 2a_i\)

The key difference is that np.roll uses periodic boundary conditions (the array wraps around), which is appropriate for simulating reaction-diffusion on a torus. Let’s verify that these two approaches give the same result (in the interior):

# Build the tridiagonal matrix from day_2 lecture

def build_heat_matrix(N):

"""Build the N x N tridiagonal matrix for heat diffusion."""

M = np.zeros((N, N))

for i in range(N):

M[i, i] = -2 # diagonal

if i > 0:

M[i, i-1] = 1 # sub-diagonal

if i < N - 1:

M[i, i+1] = 1 # super-diagonal

return M

# Test with a simple example

N = 8

dx = 1.0

a = np.random.randn(N) # random concentration values

# Method 1: Using the laplacian1D function (np.roll)

lap_roll = laplacian1D(a, dx)

# Method 2: Using matrix multiplication

M = build_heat_matrix(N)

lap_matrix = M @ a / (dx ** 2)

print("Original array a:")

print(a)

print("\nLaplacian via np.roll (periodic BC):")

print(lap_roll)

print("\nLaplacian via matrix multiplication:")

print(lap_matrix)

print("\nThey match in the interior (indices 1 to N-2):")

print(np.allclose(lap_roll[1:-1], lap_matrix[1:-1]))Original array a:

[-1.48276455 -0.34356893 0.81486398 1.05772329 -2.05353289 -0.11058882

0.57477288 -0.15841398]

Laplacian via np.roll (periodic BC):

[ 2.46354619 0.0192373 -0.91557361 -3.35411548 5.05420024 -1.25758237

-1.41854855 -0.59116372]

Laplacian via matrix multiplication:

[ 2.62196016 0.0192373 -0.91557361 -3.35411548 5.05420024 -1.25758237

-1.41854855 0.89160083]

They match in the interior (indices 1 to N-2):

TrueThe results match in the interior! The boundary values differ because: - np.roll wraps around (periodic BC): position 0 gets contribution from position N-1 - Matrix method assumes fixed boundaries: position 0 only sees position 1

For Turing pattern simulations, periodic boundaries are natural (imagine the domain as a torus). The np.roll approach is also much faster than explicit matrix multiplication for large arrays!

To aid in generating visualisations, I’ve written a base class which updates and plots a system based on some notion of state. There’s nothing specific about the reaction diffusion equations encoded in it, so I’m not going to go into any detail about it. You can find the full code for it, along with this notebook on github here. It uses matplotlib’s animation functionality to plot the output as a gif.

All together we can simulate the effect of the diffusion equation:

class OneDimensionalDiffusionEquation(BaseStateSystem):

def __init__(self, D):

self.D = D

self.width = 1000

self.dx = 10 / self.width

self.dt = 0.9 * (self.dx ** 2) / (2 * D)

self.steps = int(0.1 / self.dt)

def initialise(self):

self.t = 0

self.X = np.linspace(-5,5,self.width)

self.a = np.exp(-self.X**2)

def update(self):

for _ in range(self.steps):

self.t += self.dt

self._update()

def _update(self):

La = laplacian1D(self.a, self.dx)

delta_a = self.dt * (self.D * La)

self.a += delta_a

def draw(self, ax):

ax.clear()

ax.plot(self.X,self.a, color="r")

ax.set_ylim(0,1)

ax.set_xlim(-5,5)

ax.set_title("t = {:.2f}".format(self.t))

one_d_diffusion = OneDimensionalDiffusionEquation(D=1)

one_d_diffusion.plot_time_evolution("diffusion.gif")

As expected - the diffusion equation causes the the concentration to “spread out”.

Reaction

Interactions between the two chemical components are introduced via the functions \(R_{a}\) and \(R_{b}\). These functions only depend on the local concentration of each of the chemicals. Their exact form will depend on the chemicals involved, but it is possible to show that Turing patterns are observed for a whole class of different equations.

The one thing we will require from these equations is that they reach a stable equilibrium when the concentrations of the chemicals involved are completely homogeneous. This means that there exists concentrations of \(a\) and \(b\) such that

\(R_a(a_{0}, b_{0}) = 0\)

\(R_{b}(a_{0}, b_{0}) = 0\)

The fact that we require this to hold will make the later instability more suprising.

For the reaction equations, I’m going to use the FitzHugh–Nagumo equation

\(R_a(a, b) = a - a^{3} - b + \alpha\)

\(R_{b}(a, b) = \beta (a - b)\)

Where \(\alpha\) and \(\beta\) are constants.

Let’s see how it behaves

class ReactionEquation(BaseStateSystem):

def __init__(self, Ra, Rb):

self.Ra = Ra

self.Rb = Rb

self.dt = 0.01

self.steps = int(0.1 / self.dt)

def initialise(self):

self.t = 0

self.a = 0.1

self.b = 0.7

self.Ya = []

self.Yb = []

self.X = []

def update(self):

for _ in range(self.steps):

self.t += self.dt

self._update()

def _update(self):

delta_a = self.dt * self.Ra(self.a,self.b)

delta_b = self.dt * self.Rb(self.a,self.b)

self.a += delta_a

self.b += delta_b

def draw(self, ax):

ax.clear()

self.X.append(self.t)

self.Ya.append(self.a)

self.Yb.append(self.b)

ax.plot(self.X,self.Ya, color="r", label="A")

ax.plot(self.X,self.Yb, color="b", label="B")

ax.legend()

ax.set_ylim(0,1)

ax.set_xlim(0,5)

ax.set_xlabel("t")

ax.set_ylabel("Concentrations")

alpha, beta = 0.2, 5

def Ra(a,b): return a - a ** 3 - b + alpha

def Rb(a,b): return (a - b) * beta

one_d_reaction = ReactionEquation(Ra, Rb)

one_d_reaction.plot_time_evolution("reaction.gif", n_steps=50)

The system is stable, and stabilises to \(a = b = \sqrt[3]{\alpha}\).

Full Model

We now have two parts to the reaction diffusion equation: a diffusion term that “spreads” out concentration and a reaction part the equalises the two concentrations. It feels like these two together should create a stable system, so it is surprising that we can end up with patterns.

But enough with the preliminaries, let’s take a look at some of the patterns formed.

def random_initialiser(shape):

return(

np.random.normal(loc=0, scale=0.05, size=shape),

np.random.normal(loc=0, scale=0.05, size=shape)

)

class OneDimensionalRDEquations(BaseStateSystem):

def __init__(self, Da, Db, Ra, Rb,

initialiser=random_initialiser,

width=1000, dx=1,

dt=0.1, steps=1):

self.Da = Da

self.Db = Db

self.Ra = Ra

self.Rb = Rb

self.initialiser = initialiser

self.width = width

self.dx = dx

self.dt = dt

self.steps = steps

def initialise(self):

self.t = 0

self.a, self.b = self.initialiser(self.width)

def update(self):

for _ in range(self.steps):

self.t += self.dt

self._update()

def _update(self):

# unpack so we don't have to keep writing "self"

a,b,Da,Db,Ra,Rb,dt,dx = (

self.a, self.b,

self.Da, self.Db,

self.Ra, self.Rb,

self.dt, self.dx

)

La = laplacian1D(a, dx)

Lb = laplacian1D(b, dx)

delta_a = dt * (Da * La + Ra(a,b))

delta_b = dt * (Db * Lb + Rb(a,b))

self.a += delta_a

self.b += delta_b

def draw(self, ax):

ax.clear()

ax.plot(self.a, color="r", label="A")

ax.plot(self.b, color="b", label="B")

ax.legend()

ax.set_ylim(-1,1)

ax.set_title("t = {:.2f}".format(self.t))

Da, Db, alpha, beta = 1, 100, -0.005, 10

def Ra(a,b): return a - a ** 3 - b + alpha

def Rb(a,b): return (a - b) * beta

width = 100

dx = 1

dt = 0.001

OneDimensionalRDEquations(

Da, Db, Ra, Rb,

width=width, dx=dx, dt=dt,

steps=100

).plot_time_evolution("1dRD.gif", n_steps=150)

A pattern of stripes.

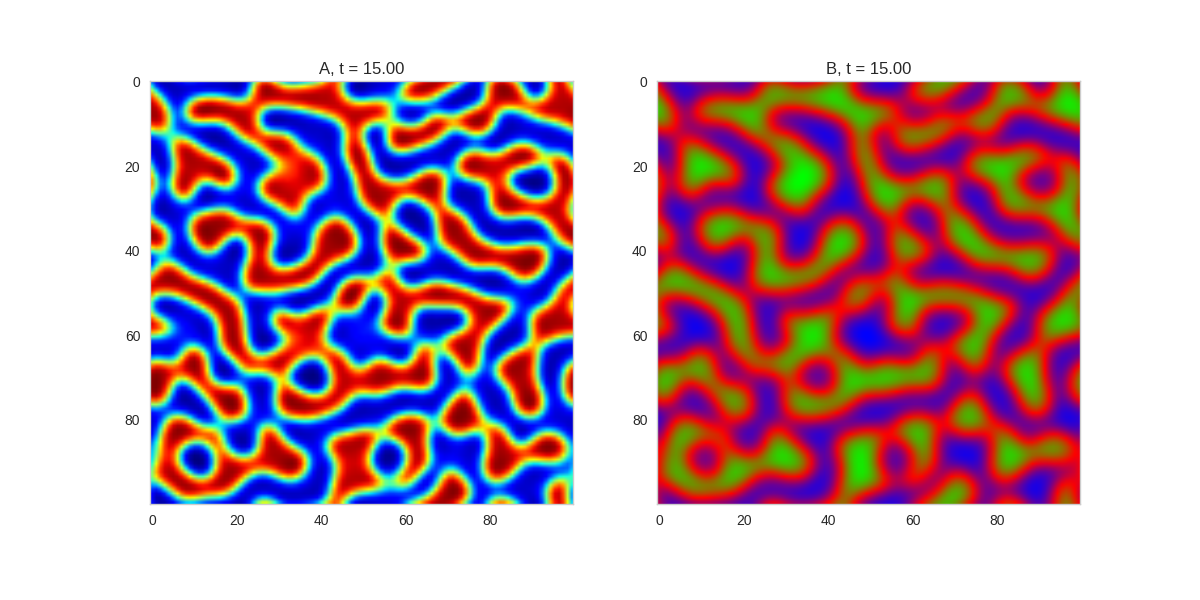

We can see that same behavior in two dimensions:

class TwoDimensionalRDEquations(BaseStateSystem):

def __init__(self, Da, Db, Ra, Rb,

initialiser=random_initialiser,

width=1000, height=1000,

dx=1, dt=0.1, steps=1):

self.Da = Da

self.Db = Db

self.Ra = Ra

self.Rb = Rb

self.initialiser = initialiser

self.width = width

self.height = height

self.shape = (width, height)

self.dx = dx

self.dt = dt

self.steps = steps

def initialise(self):

self.t = 0

self.a, self.b = self.initialiser(self.shape)

def update(self):

for _ in range(self.steps):

self.t += self.dt

self._update()

def _update(self):

# unpack so we don't have to keep writing "self"

a,b,Da,Db,Ra,Rb,dt,dx = (

self.a, self.b,

self.Da, self.Db,

self.Ra, self.Rb,

self.dt, self.dx

)

La = laplacian2D(a, dx)

Lb = laplacian2D(b, dx)

delta_a = dt * (Da * La + Ra(a,b))

delta_b = dt * (Db * Lb + Rb(a,b))

self.a += delta_a

self.b += delta_b

def draw(self, ax):

ax[0].clear()

ax[1].clear()

ax[0].imshow(self.a, cmap='jet')

ax[1].imshow(self.b, cmap='brg')

ax[0].grid(visible=False)

ax[1].grid(visible=False)

ax[0].set_title("A, t = {:.2f}".format(self.t))

ax[1].set_title("B, t = {:.2f}".format(self.t))

def initialise_figure(self):

fig, ax = plt.subplots(nrows=1, ncols=2, figsize=(12,6))

return fig, ax

Da, Db, alpha, beta = 1, 100, -0.015, 10

def Ra(a,b): return a - a ** 3 - b + alpha

def Rb(a,b): return (a - b) * beta

width = 100

dx = 1

dt = 0.001

TwoDimensionalRDEquations(

Da, Db, Ra, Rb,

width=width, height=width,

dx=dx, dt=dt, steps=100

).plot_evolution_outcome("2dRD.png", n_steps=150)(It is possible to animate the formation of these patterns using a similar method call as in the one-dimensional case above. The resulting gifs are quite impressive, but also large, so they are not directly included here. However, if you click on the image it will take you to the animation)

It turns out this behaviour is common to a lot of reaction-diffusion equations, not just the ones presented here.

So how does this happen? To understand, we are going to need to do some maths.

Stability Analysis

Let’s return to the original equations. We’ve seen that for certain conditions, pattern formations occurs.

Unfortunately, solving the equations directly for non-linear reaction functions is often not possible. Instead we can look at what happens when the the system is perturbed slightly from equilibrium.

Linearising the Equations

We start by assuming there is some concentrations, \(a_{0}\) and \(b_{0}\), for which the system is stable. This means that - \(R_{a}(a_{0},b_{0}) = 0\) - \(R_{b}(a_{0},b_{0}) = 0\)

Around these solutions, we look at the time dependence of small perturbations around these values - \(x = a - a_{0}\) - \(y = b - b_{0}\)

And linearise the reaction equations - \(R_{a}(a,b) \approx r_{aa}x + r_{ab}y\) - \(R_{b}(a,b) \approx r_{ba}x + r_{bb}y\)

where \(r_{ij} = \frac{\partial R_{i}}{\partial j}\).

These approximations give us a set of linear equations, written in vector form as

\(\dot{\mathbf{x}} = D\nabla^{2} \mathbf{x} + R \mathbf{x}\)

Where \(R = \left(\begin{matrix} r_{11} & r_{12} \\ r_{21} & r_{22}\end{matrix}\right)\) and \(D = \left(\begin{matrix} D_{a} & 0 \\ 0 & D_{b}\end{matrix}\right)\)

Fourier Transform

If we impose periodic boundary conditions to this equation, a natural solution can be found by applying a Fourier Transformation to \(\mathbf{x}\). If we call the reciprocal coordinate \(k\), and the Fourier transform of \(\mathbf{x}\) as \(\tilde{\mathbf{x}}\), then the transformed equation is

\(\dot{\tilde{\mathbf{x}}} = (R - k^{2}D) \tilde{\mathbf{x}}\)

Which, has solutions of the form

\(\tilde{\mathbf{x}}(t) = \tilde{\mathbf{x}}(0) e^{\omega t}\)

To find \(\omega\) we plug this solution back into our transformed equation to get

\(\omega \tilde{\mathbf{x}} = (R - k^{2}D) \tilde{\mathbf{x}}\)

Showing that \(\omega\) is just the eigenvalue of \((R - k^{2}D)\).

Stability

We now have an equation for the time dependence of our system in the Fourier domain. Using it, we can now discuss what we mean by stability. Our system is considered stable if small perturbations around the homogeneous do not cause it to move further away from the stable solutions.

In terms of our solution, \(\tilde{\mathbf{x}}(0) e^{\omega t}\), stability means that the values of \(\omega\) does not have positive real parts for all values of \(k\). If \(\omega\) is negative, the perturbation will decay away. If \(\omega\) is imaginary, it will oscillate around the stable state. However, if it is positive and real any small perturbation will grow exponentially, until a high order term of the reaction equation becomes important.

To find \(\omega\), we need to solve the equation

$(R - k^{2}D - I) = 0 $

Writing \(J = R - k^{2}D\), this equitation takes the form

\(\omega^{2} - \omega\hbox{Tr}(J) + \hbox{Det}(J) = 0\)

Solving for \(\omega\), we get

\(\omega = \frac{1}{2}(\hbox{Tr}(J) \pm \sqrt{\hbox{Tr}(J)^{2} - 4 \hbox{Det}(J) })\)

Conditions for (in)Stability

From our initial assumption that there was a stable homogeneous state, we require that \(\omega\) has negative real parts where \(k = 0\), which corresponds to the spatially homogeneous solution. For this to be true, we require that

- \(\hbox{Tr}(R) < 0\)

- \(\hbox{det}(R) > 0\)

Or, in terms of the components of \(R\): - \(r_{aa} + r_{bb} < 0\) - \(r_{aa}r_{bb} - r_{ab}r_{ba} > 0\)

For an instability to now occur at finite wavelength, we need one of the following conditions to hold: - \(\hbox{Tr}(J) > 0\) - \(\hbox{det}(J) < 0\)

Because \(\hbox{Tr}(J) = \hbox{Tr}(R) - k^{2}(d_{a} + d_{b})\), the first condition cannot hold for any real \(k\). This means the we require the second to hold, or, after a bit of algebra

\(k^{4}d_{a}d_{b} - k^{2}(d_{a}r_{bb} + d_{b}r_{aa}) + (r_{aa}r_{bb} - r_{ab}r_{ba}) < 0\)

for some real value of \(k\). Once again, we need to solve a quadratic equation. To do this we note that because \(k\) needs to be real, \(k^{2}\) must be positive. This means that at least one root of quadratic equation in \(k^{2}\) needs to be positive and real. This condition is only met when

\(d_{b}r_{aa} + d_{a}r_{bb} > 2\sqrt{d_{a}d_{b}(r_{aa}r_{bb} - r_{ab}r_{ba})} > 0\)

And that’s it. We’ve derived that conditions for the diffusion-reaction equations to be stable to small perturbations.

We can write the complete requirements as

- \(r_{aa} + r_{bb} < 0\)

- \(r_{aa}r_{bb} - r_{ab}r_{ba} > 0\)

- \(d_{b}r_{aa} + d_{a}r_{bb} > 2\sqrt{d_{a}d_{b}(r_{aa}r_{bb} - r_{ab}r_{ba})} > 0\)

Discussion

We’ve waded through a lot of maths to reach this point. Let’s take stock of what we can make of the conditions for instability that we have derived.

Given the diffusion coefficients are positive, from the first and third equation above, we know that \(r_{aa}\) and \(r_{bb}\) have to have different signs, and the component with the negative sign has to be larger. Combining this we the second equation we know that either \(r_{ab}\) or \(r_{ba}\) has to be negative, and the other has to be positive. This two facts show why some systems like this are called activator-inhibitor system: the presence of one component increase the production of itself - the other inhibits the production of both.

With this information, the third equation shows us that the diffusion coefficients need to be different. Specifically, the diffusion coefficient of the inhibitor has to be larger then that of the activator.

Together, this gives us a hand-waving explanation of what might be going on: Consider starting with a small increase in the activator at some point. This in turn creates an increase in the inhibitor at that point. Both of these chemical diffuse to nearby points, but the inhibitor diffuses faster, lowering the activator concentration of nearby points. This lowering of the activator concentration at nearby points, which in turn lowers the inhibitor concentration. The disturbance spreads out like a wave.

It is a lot easier to get a feel for what’s going on once we visualise the this perturbation:

Da, Db, alpha, beta = 1, 100, -0.005, 10

def Ra(a,b): return a - a ** 3 - b + alpha

def Rb(a,b): return (a - b) * beta

def initalise_bump(shape):

a = np.zeros(shape)

if len(a.shape) == 1:

a[int(a.shape[0] / 2)] = 0.3

elif len(a.shape) == 2:

a[int(a.shape[0] / 2), int(a.shape[1] / 2)] = 0.3

return(

a,

np.zeros(shape)

)

width = 100

dx = 1

dt = 0.001

OneDimensionalRDEquations(

Da, Db, Ra, Rb,

initialiser=initalise_bump,

width=width, dx=dx, dt=dt,

steps=250

).plot_time_evolution("1dRD_initial_bump.gif", n_steps=150)

Exploring the Parameter Space

It is interesting to ask how the parameters of the equations affect the pattern formation. To get a feel for what this dependence is, we can investigate the amplitude of the instability for different frequencies. Close to equilibrium, we expect the frequency with the largest real component to dominate the dynamics of the system.

Calculating this value isn’t mathematically difficult: we have an equation of the amplitude \(\omega\) in terms of the frequency \(k\) above. Differentiating an equation of this size by hand can become tedious due to its size, and it is easy to introduce errors. And why carry out simple replacement steps when we have a computer to hand?

In the following, I use the computer algebra system sympy to find the largest real mode of the system

import sympy as sp

sp.init_printing()

# define symbols

a,b,alpha,beta = sp.symbols("a,b,alpha,beta")

Raa, Rab, Rba, Rbb = sp.symbols("Raa, Rab, Rba, Rbb")

Da, Db = sp.symbols("Da, Db")

k2 = sp.Symbol("k2")

# create matricies

R = sp.Matrix(

[[Raa, Rab],

[Rba, Rbb]]

)

D = sp.Matrix(

[[Da, 0],

[0, Db]]

)

J = (R - k2 * D)

# define our solution for omega

omega = sp.Matrix.trace(J) + sp.sqrt(sp.Matrix.trace(J) ** 2 - 4 * sp.Matrix.det(J))

omega\(\displaystyle - Da k_{2} - Db k_{2} + Raa + Rbb + \sqrt{- 4 Da Db k_{2}^{2} + 4 Da Rbb k_{2} + 4 Db Raa k_{2} - 4 Raa Rbb + 4 Rab Rba + \left(- Da k_{2} - Db k_{2} + Raa + Rbb\right)^{2}}\)

# find the maximum value of omega for k^{2}

omega_prime = sp.diff(omega, k2)

sols = sp.solvers.solve(omega_prime, k2)

max_k2 = sols[0]

max_k2\(\displaystyle \frac{Da Db Raa - Da Db Rbb - Da \sqrt{- Da Db Rab Rba} - Db \sqrt{- Da Db Rab Rba}}{Da Db \left(Da - Db\right)}\)

def get_length_scale(al, be, da, db):

# rewritng in terms of Da, Db, alpha, beta

Ra = a - a**3 - b + alpha

Rb = beta * (a - b)

# we expand around the homogenius steady state

zero_subs = [

(a, sp.sign(alpha) * sp.cbrt(sp.Abs(alpha))),

(b, sp.sign(alpha) * sp.cbrt(sp.Abs(alpha)))

]

# and linearise

R_prime_subs = {

(Raa, sp.diff(Ra, a)),

(Rab, sp.diff(Ra, b)),

(Rba, sp.diff(Rb, a)),

(Rbb, sp.diff(Rb, b))

}

# putting in our numeric values we get

vals = [

(alpha, al),

(beta, be),

(Da, da),

(Db, db)

]

# substitute it all in to find the maximum k

max_k2 = [sp.N(

sol

.subs(R_prime_subs)

.subs(zero_subs)

.subs(vals)

) for sol in sols]

k2 = max(sp.re(sym) for sym in max_k2)

# if k2 is negative, no real solution exists

if k2 <= 0:

return -1

# convert k2 into length units

return sp.N(2 * sp.pi / sp.sqrt(k2))

get_length_scale(-0.005, 10, 1, 100)\(\displaystyle 13.6336345738685\)

Which looks about right when we compare it to our simulations above - the spatial variation is about 14 length units.

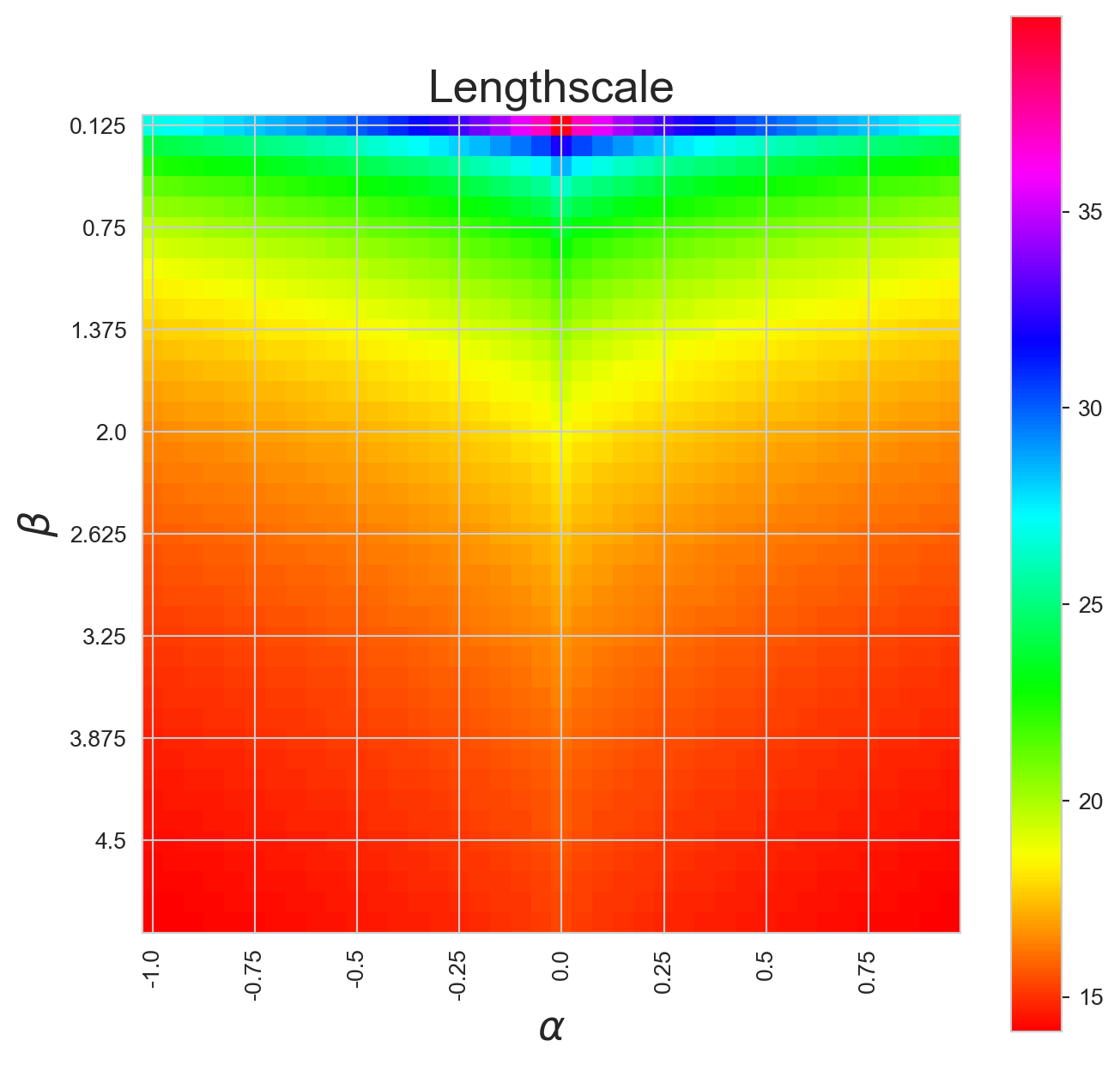

Let’s use it to see how the length scale of the system changes as a function of \(\alpha\) and \(\beta\):

betas = [(1 + i) * 0.125 for i in range(0,40)]

alphas = [i * 0.05 for i in range(-20,20)]

outcomes = np.zeros((len(betas), len(alphas)))

for x,al in enumerate(alphas):

for y,be in enumerate(betas):

outcomes[y,x] = get_length_scale(al, be, 1, 100)

plt.figure(figsize=(8,8))

o = outcomes

o[outcomes == 0] = -1

plt.imshow(o, cmap="hsv", interpolation="none")

plt.colorbar()

plt.xticks(*zip(*[(n,l) for n,l in enumerate(alphas) if n % 5 == 0]), rotation=90)

plt.yticks(*zip(*[(n,l) for n,l in enumerate(betas) if n % 5 == 0]), rotation=0)

plt.title("Lengthscale", fontsize=20)

plt.xlabel(r"$\alpha$", fontsize=18)

plt.ylabel(r"$\beta$", fontsize=18);

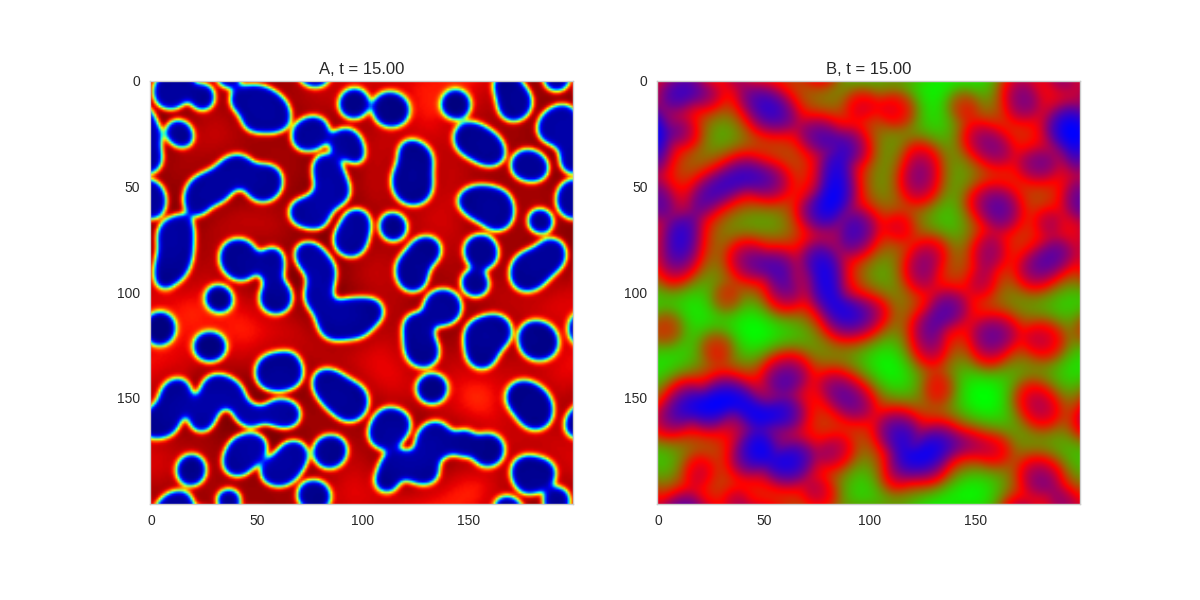

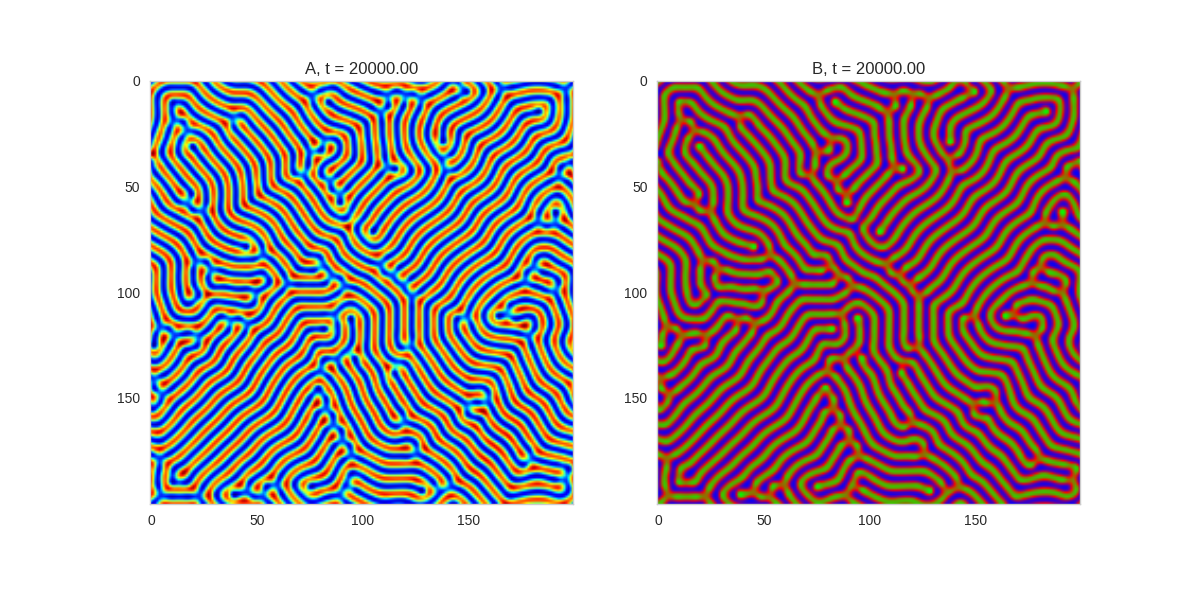

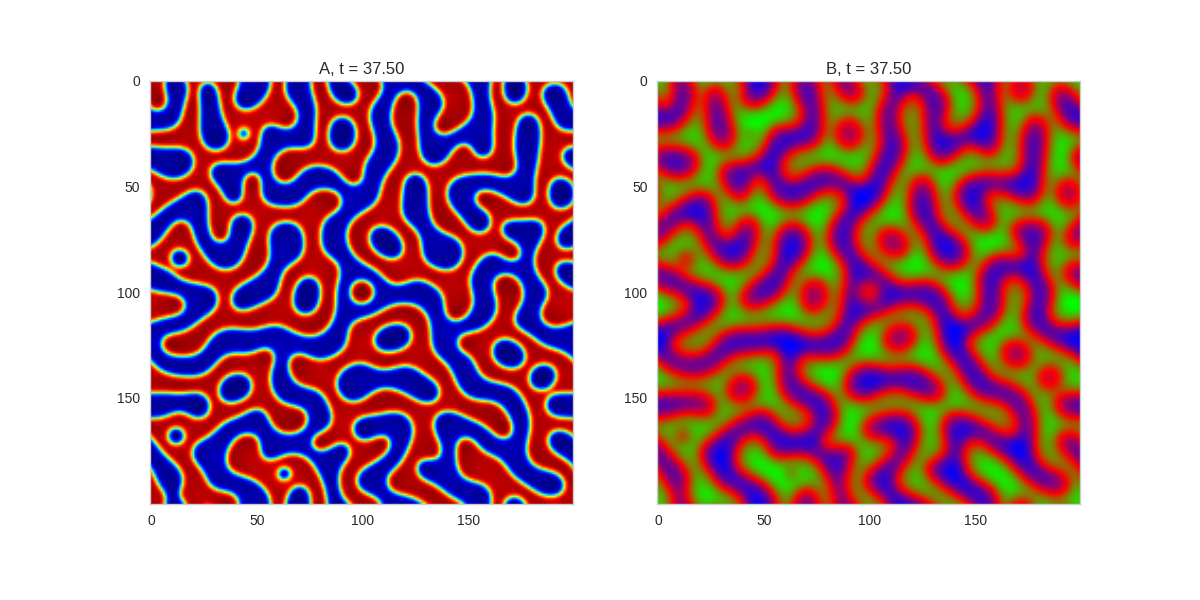

And take a look at the long length scale we should see when we use a low value of \(\beta\):

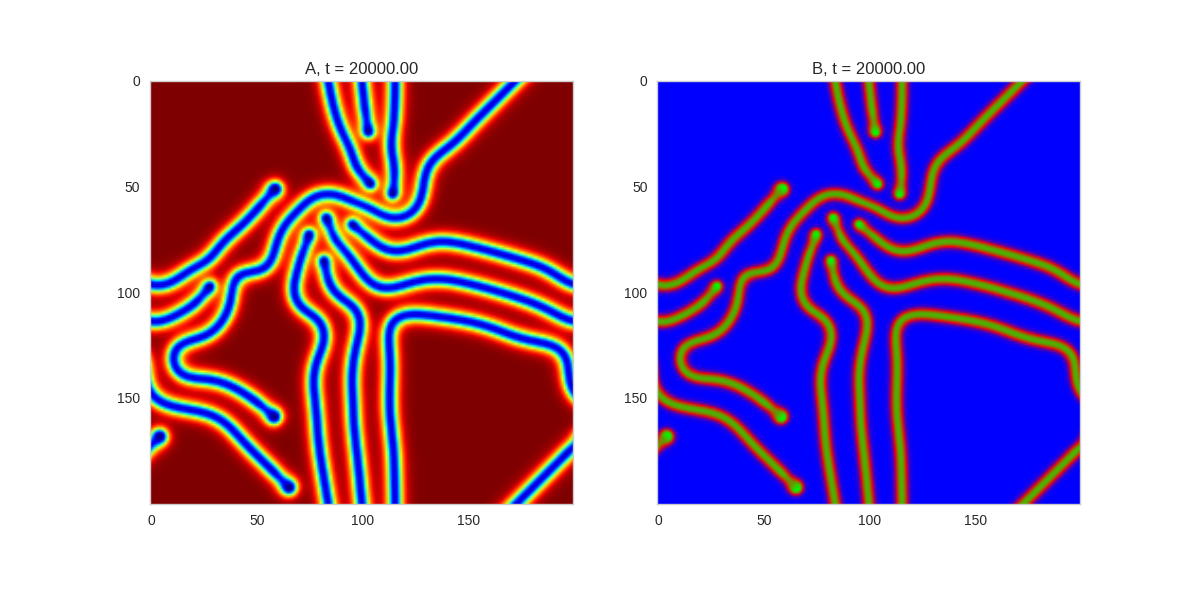

Da, Db, alpha, beta = 1, 100, 0.01, 0.25

def Ra(a,b): return a - a ** 3 - b + alpha

def Rb(a,b): return (a - b) * beta

width = 200

dx = 1

dt = 0.001

TwoDimensionalRDEquations(

Da, Db, Ra, Rb,

width=width, height=width,

dx=dx, dt=dt, steps=150

).plot_evolution_outcome("2dRD_small_beta.png", n_steps=100)Initial Conditions

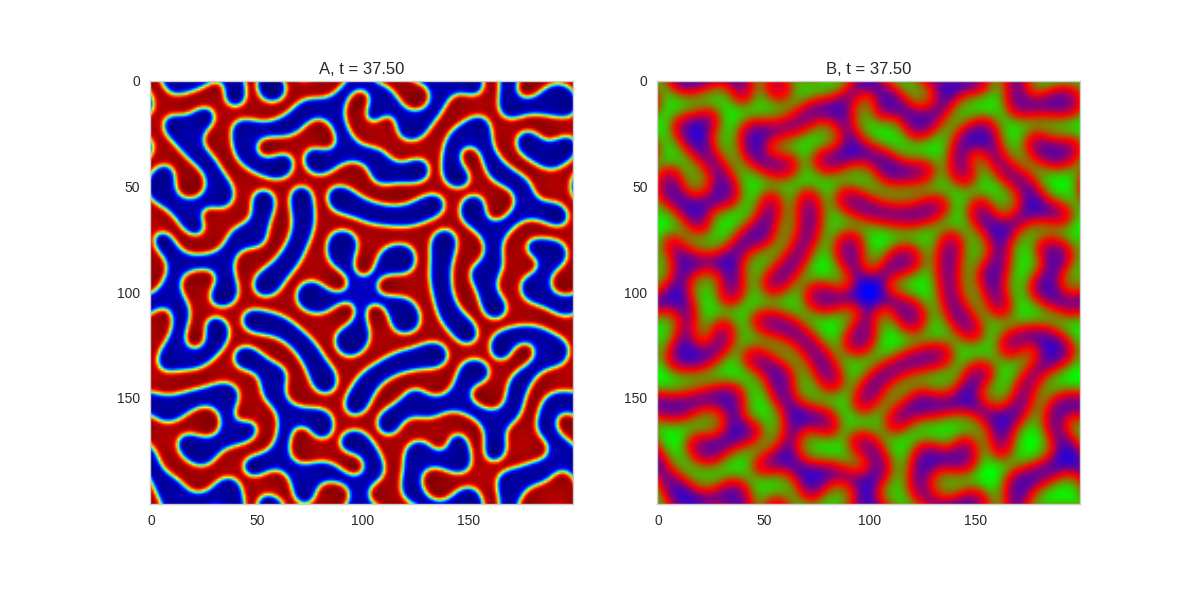

Adjusting the parameters gives us control over the length scale of the system. We can also gain some control over the system by adjusting the initial conditions of the system. For example, we can impose symmetries on the system by making the initial conditions symmetric:

from scipy.ndimage.interpolation import rotate

def average_rotate(a, degree):

"""

Takes a 2d array a, and produces the average arrays,

having rotated it degree times. The resulting shape

has approximate degree-fold rotational symmetry.

"""

theta = 360 / degree

a = np.mean([rotate(a, theta * i, reshape=False) for i in range(degree)], axis=0)

return a

def random_symmetric_initialiser(shape, degree):

"""

Random initialiser with degree-fold symmetry.

"""

a = np.random.normal(loc=0, scale=0.05, size=shape)

b = np.random.normal(loc=0, scale=0.05, size=shape)

return (

average_rotate(a, degree),

average_rotate(b, degree)

)

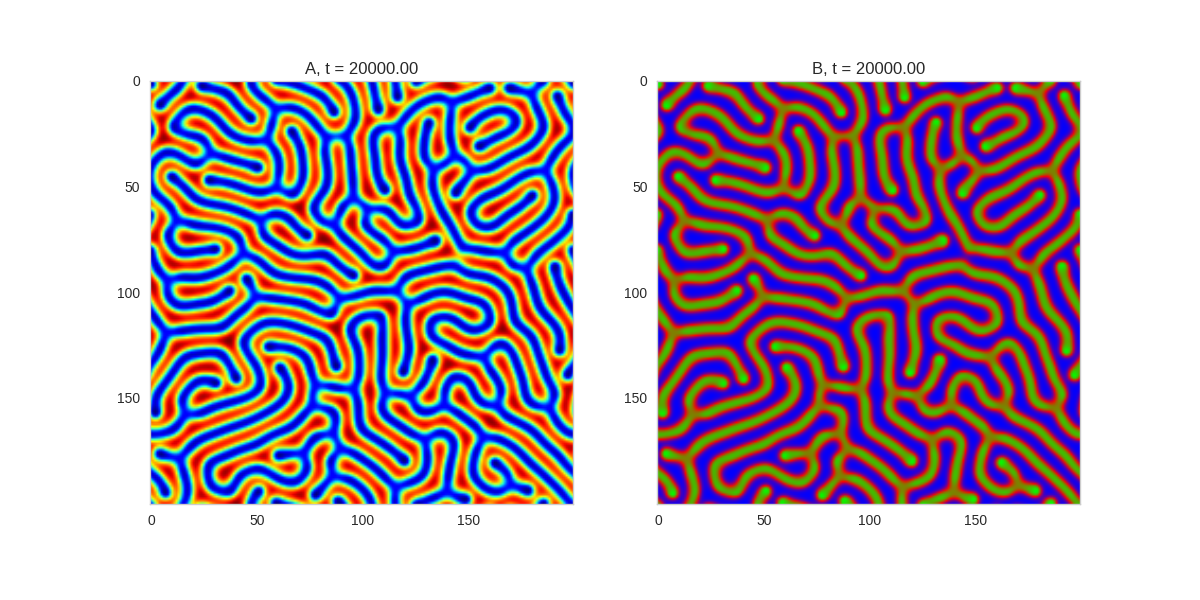

Da, Db, alpha, beta = 1, 100, 0.01, 1

def Ra(a,b): return a - a ** 3 - b + alpha

def Rb(a,b): return (a - b) * beta

width = 200

dx = 1

dt = 0.001

# three fold

three_fold_initialiser = lambda shape: random_symmetric_initialiser(shape, 3)

TwoDimensionalRDEquations(

Da, Db, Ra, Rb,

initialiser=three_fold_initialiser,

width=width, height=width,

dx=dx, dt=dt, steps=250

).plot_evolution_outcome("2dRD_3_fold_sym.png", n_steps=150)

# five fold

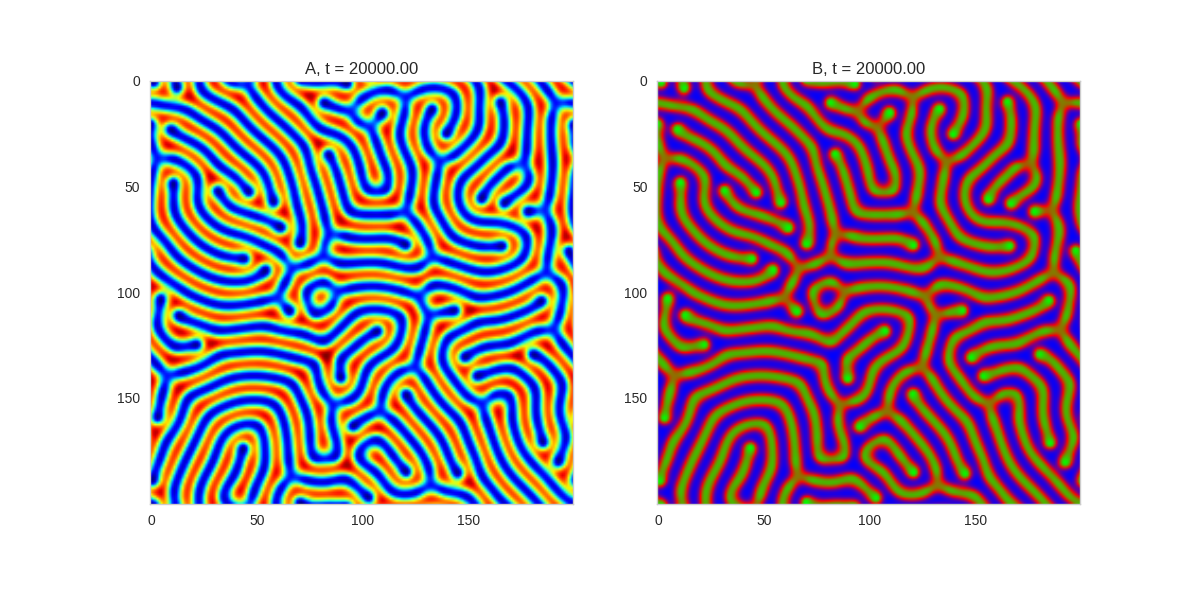

five_fold_initialiser = lambda shape: random_symmetric_initialiser(shape, 5)

TwoDimensionalRDEquations(

Da, Db, Ra, Rb,

initialiser=five_fold_initialiser,

width=width, height=width,

dx=dx, dt=dt, steps=250

).plot_evolution_outcome("2dRD_5_fold_sym.png", n_steps=150)/var/folders/xk/ff6yqns95pz6qvvl9b0lq01w0000gq/T/ipykernel_82883/2381211232.py:1: DeprecationWarning:

Please use `rotate` from the `scipy.ndimage` namespace, the `scipy.ndimage.interpolation` namespace is deprecated.

Three fold rotational symmetry:

Five fold rotational symmetry:

The symmetry isn’t perfect due to implementing it one a square grid, but it works quite well.

Spatial Effects

Another interesting extension to these systems is to see what happens when we allow the parameters of the system to vary in space. In our implementation, this is just a matter of making the parameters a grid as well:

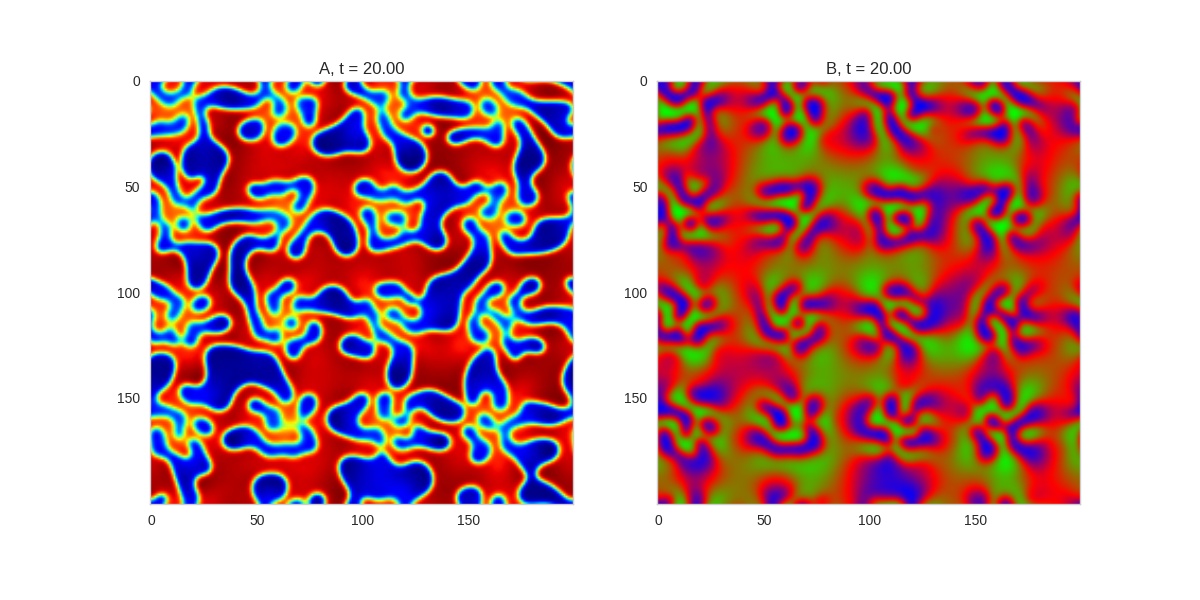

Da, Db, alpha, beta = 1, 100, 0.01, 10

width = 200

dx = 1

dt = 0.001

x,y = np.mgrid[0:width,0:width]

beta = 0.1 + 5 * (1 + np.sin(2 * np.pi * y / 50)) * (1 + np.sin(2 * np.pi * x / 50))

def Ra(a,b): return a - a ** 3 - b + alpha

def Rb(a,b): return (a - b) * beta

TwoDimensionalRDEquations(

Da, Db, Ra, Rb,

width=width, height=width,

dx=dx, dt=dt, steps=100

).plot_evolution_outcome("2dRD_spatial.png", n_steps=200)Similar Systems

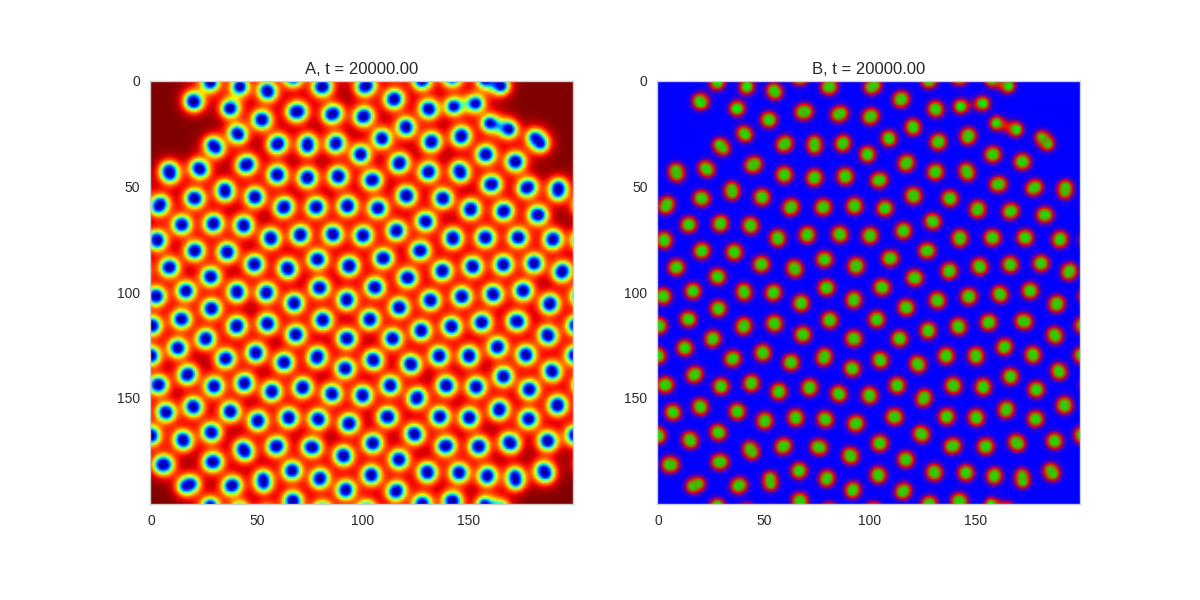

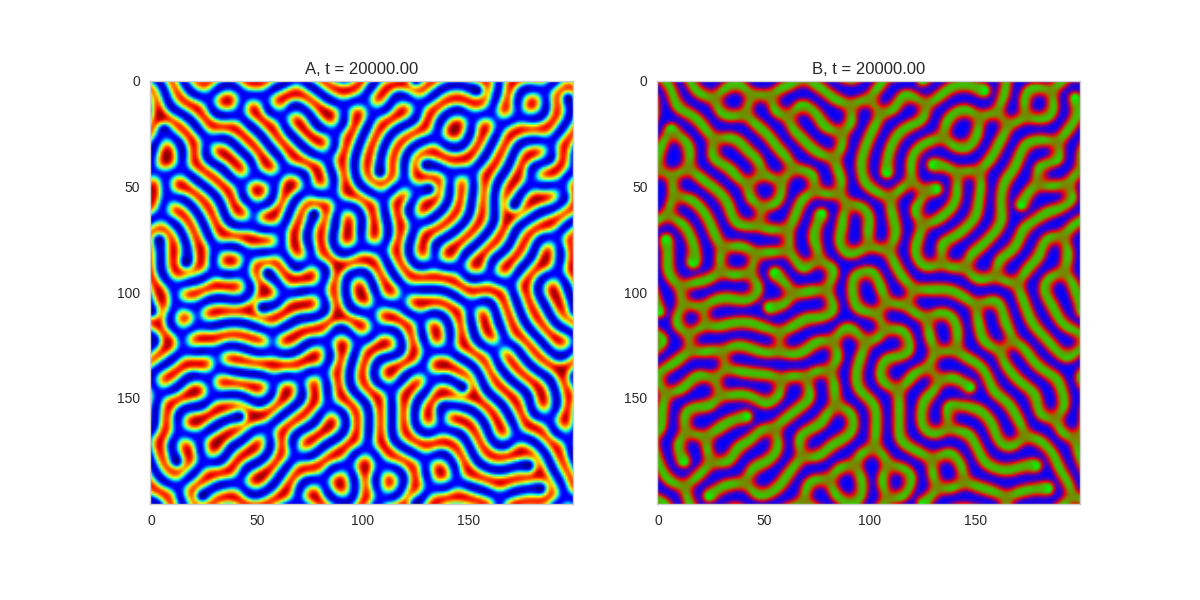

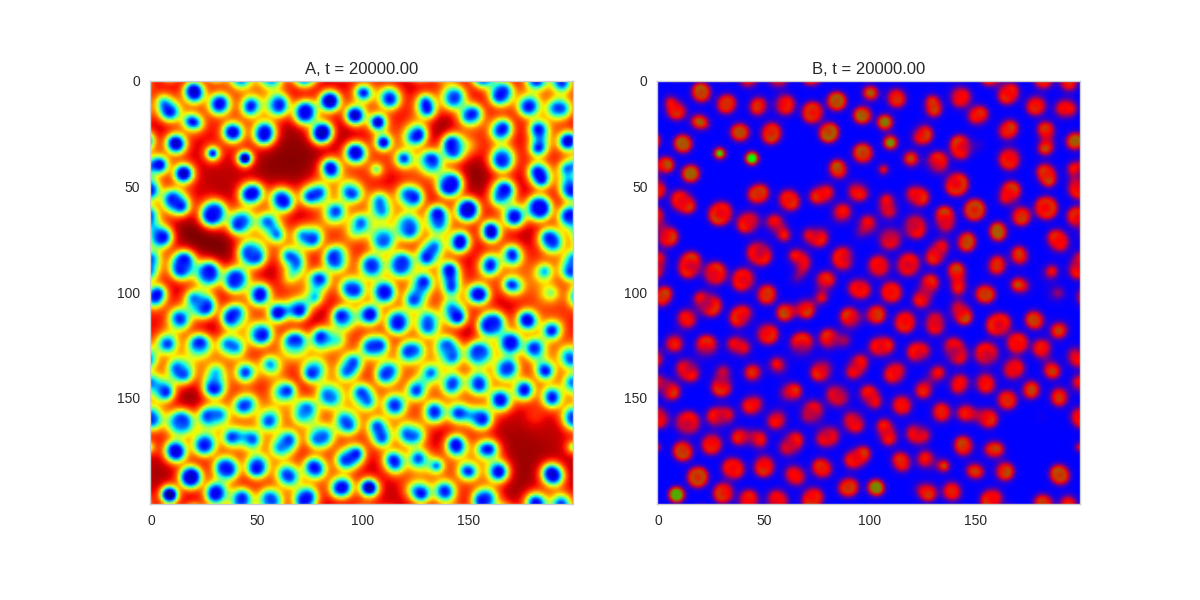

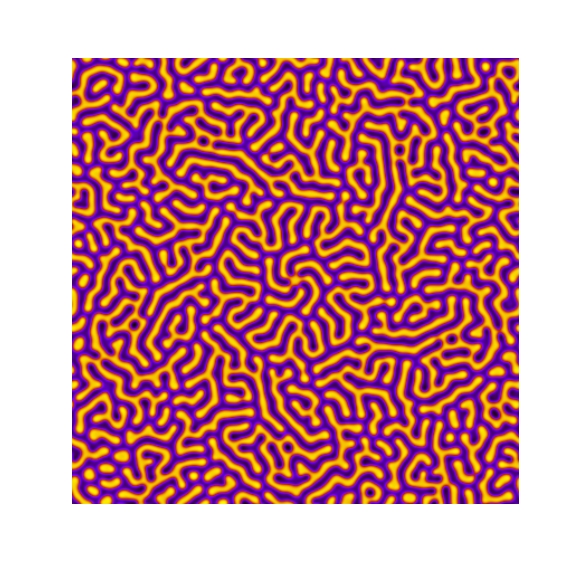

Turing patterns are only one part of pattern generation from non-linear PDEs. Given we have the tools to explore these systems, it is worth looking at another simple system in which pattern formation occurs: the Gray-Scott equations. As described in the paper Complex Patterns in a Simple System, for an interesting set of initial conditions the Gray-Scott equations result in a range complex patterns being formed.

While these equations are reaction-diffusion equations, the patterns that end up being formed are not from Turing instabilities. Instead they come from the initial conditions of the system: a small region in the centre of the region is perturbed. As this perturbation spreads out, strange patterns are formed.

To visualise it, we just need to pass another initialisation function of our previous class, and put in new reaction equations. The parameters for the interesting systems are taken from here. I’m not currently aware of any theory that gives a full explanation of these patterns.

def gs_initialiser(shape):

a = np.ones(shape)

b = np.zeros(shape)

centre = int(shape[0] / 2)

a[centre-20:centre+20,centre-20:centre+20] = 0.5

b[centre-20:centre+20,centre-20:centre+20] = 0.25

a += np.random.normal(scale=0.05, size=shape)

b += np.random.normal(scale=0.05, size=shape)

return a,b

# interesting parameters taken from http://www.aliensaint.com/uo/java/rd/

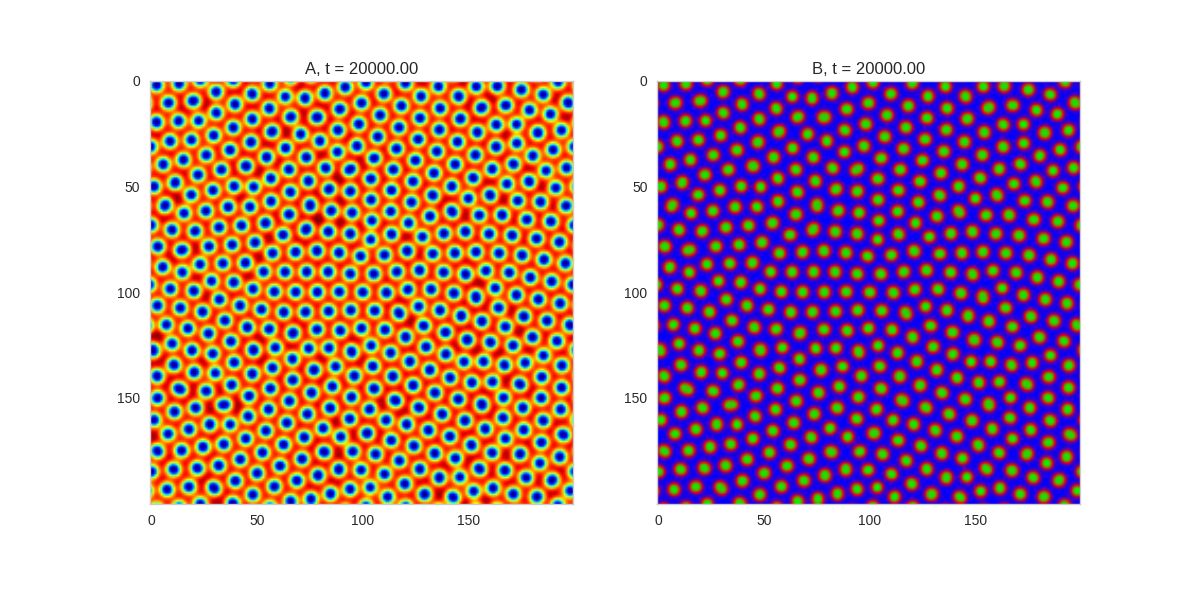

params = [

[0.16, 0.08, 0.035, 0.065],

[0.14, 0.06, 0.035, 0.065],

[0.16, 0.08, 0.06, 0.062],

[0.19, 0.05, 0.06, 0.062],

[0.16, 0.08, 0.02, 0.055],

[0.16, 0.08, 0.05, 0.065],

[0.16, 0.08, 0.054, 0.063],

[0.16, 0.08, 0.035, 0.06]

]

for i, param in enumerate(params):

Da, Db, f, k = param

def Ra(a,b): return - a*b*b + f*(1-a)

def Rb(a,b): return + a*b*b - (f+k)*b

width = 200

dx = 1

dt = 1

TwoDimensionalRDEquations(

Da, Db, Ra, Rb,

initialiser=gs_initialiser,

width=width, height=width,

dx=dx, dt=dt, steps=200

).plot_evolution_outcome("gs_{}.png".format(i), n_steps=100)Conclusion

It’s been interesting to play with these non-linear systems. One of the main challenges I’ve found with looking at them is how you find “interesting” parameters. I have some ideas about how we might search for these automatically, but that will have to wait for another post.

The complexity that can arise from these simple systems surprised me at fist, but it has been shown that it is possible to encode cellular automata in reaction diffusion systems. This suggests that the patterns formed by reaction-diffusion systems can be as complex as those produced by any Turing-complete system, and that any attempt to provide a quantitative explanation for their full behaviour will eventually run into some undecidable statement.

And with that connection between Turing’s work on reaction-diffusion equations and his more well known work on computability, I think it’s time to end this post.

Code

This post was created from a jupyter notebook. You can find it here.